Python - Basic Data Operations

String and Pandas DataFrame Methods with Examples

A content site has a 2-stage sub-targeting from the first moment it meets its target audience (new users doing relevant searches); to increase the number of new visitors and to revisit (return) new visitors. These sub-goals also necessitate the production of new content and the regular development of existing content.

Follow-up of the contents and applying the necessary optimization-development processes should be regularly and iteratively handled. Otherwise, the contents begin to be divided into 3 groups over time; featured content, content without visitors, in-between. In this case, It may be a big issue when technical problems and/or algorithm updates affect the featured contents. Because the change also significantly affects the average page views of the site. Content without visitors only generates loads. The contents between featured and non-visited are mostly related to long-tail keywords, although they show small positive and negative changes in competition (on-site and off-site).

A content publisher should regularly optimize content in content-visitor and content-search engine contexts. The links in the article may have become dysfunctional over time, problems with the images and JavaScript codes in the page may have appeared there, etc.

In this article, as a continuation of the previous article titled Google Analytics and Search Console API Operations with Python, I will try to examine how we can get an idea about the content by using the Pandas library offered within the framework of the Python programming language and a few string methods. I will use Pandas methods to practice as much as possible. If I can find more functional and suitable solutions in some parts over time, I may change the code. In that case, I'll make sure to keep the old solutions as comments.

Previously, I got some information about the pages viewed between a date range via the Google Analytics Reporting API in the previous article. Here, displayed refers to the process by which the Google Analytics tracking code runs and the page-related data is transmitted to the Google Analytics server. By default, pages are handled on a URL basis1.

document.title

document.locationOf course, any customized information can also be transmitted to Google servers via JavaScript and/or the measurement protocol.

In addition to this information, different sources such as social media platforms, advertising channels, and e-mail newsletters will add various query parameters to the URL, or visitors from different countries can translate the page title and content into different languages with Google Translate and similar tools like these, and many other reasons will create outliers for the pages.

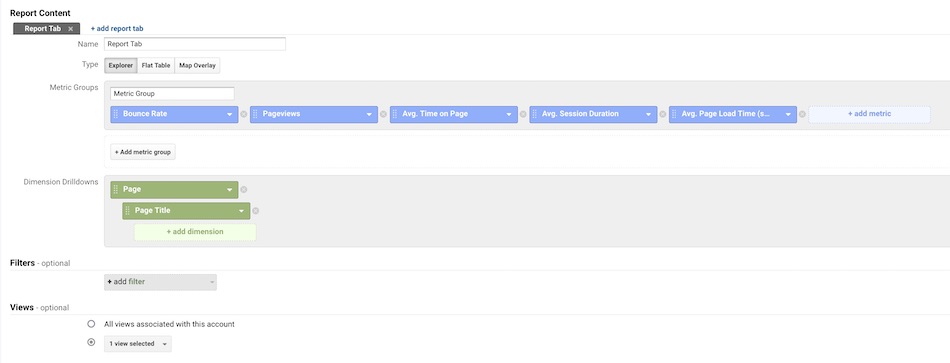

First, let's create a customized Google Analytics report that includes the relevant metrics and dimensions. We can access this report anytime and share it with anyone easily.

Cleaning Data with Pandas and String Methods

I will try to use Python Pandas methods whenever possible to practice the following operations. In some cases, I will also include additional examples about the string methods. So let's start step by step.

I will use the Google Analytics pageview data we created in the Google Analytics and Search Console API Operations with Python.

Our population size is 5939. But when we've looked at the sitemap, the expected number should have been around 560. So, how can we explain this difference?

import pandas as pd

df = pd.read_csv('GA-2020-05-30.csv')

# df.dtypes

# df.isna().any()

df.info()| Column | Type |

|---|---|

| pagePath | object |

| pageTitle | object |

| bounceRate | float64 |

| pageViews | int64 |

| avgTimeOnPage | float64 |

| avgSessionDuration | float64 |

| avgPageLoadTime | float64 |

| dtype: object |

Let's take a look at the statistics in the next step.

df.describe()| bounceRate | pageViews | avgTimeOnPage | avgSessionDuration | avgPageLoadTime | |

|---|---|---|---|---|---|

| count | 5939.000000 | 5939.000000 | 5939.000000 | 5939.000000 | 5939.000000 |

| mean | 47.068327 | 67.850985 | 133.914575 | 56.241569 | 2.157740 |

| std | 42.735453 | 287.962893 | 225.226011 | 251.591516 | 4.802585 |

| min | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 |

| 25% | 0.000000 | 1.000000 | 0.000000 | 0.000000 | 0.000000 |

| 50% | 47.500000 | 3.000000 | 17.000000 | 0.000000 | 0.000000 |

| 75% | 94.682411 | 25.000000 | 194.254808 | 44.715433 | 3.656950 |

| max | 105.555556 | 7511.000000 | 2541.000000 | 10531.000000 | 113.786000 |

Let's look at the head, tail, and sample parts of the page data.

df.head(10)

df.tail(10)

df.sample(10)This summarized information shows there are some observations close to 0 and 100 in the bounceRate column. These two values are unlikely under normal conditions. Therefore, it will be necessary to look at them closely in the next step. Likewise, it can be seen that there are similar situations in pageViews and other areas.

Looking at the pagePath field in relation to numeric values, it is possible to see some clues about the cause of numeric inconsistencies that can be considered a problem. For example, when selected and queried a page such as /en/api-whats/, it can be seen that there are many related values belonging to the same page.

df[df.apply(lambda r: r.str.contains('api-nedir', case=False).any(), axis=1)] | pagePath |

|---|

| ... |

| /tr/api-nedir |

| /tr/api-nedir/ |

| /tr/posts/api-nedir/ |

| /tr/posts/api-nedir |

| /tr/posts/2017/07/api-nedir |

| /tr/posts/2017/07/api-nedir/ |

| ... |

Also, inconsistency can be seen due to the path differences. For example, various queries take place as different values because of the hashes, parameters, lang definitions, etc. In this case, we can remove the relevant fields or the / sign at the end of the queries.

# df[df.apply(lambda p: p.str.endswith('/').any(), axis=1)] # Tüm alanları aramaya dahil etmek için

# df.loc[[p.endswith('/') for p in df.pagePath]] # Belirli bir sütunda işlem yapmak için

df.loc[df.PagePath.str.contains('/api-nedir', case=False, regex=False)]Most of the time, choosing the removal process will not be right because changes to path may have been done with a purpose over time. So let's go through the 2nd way.

# df.pagePath = df.pagePath.apply(lambda x: x.split('?')[0].rstrip('/'))

df.pagePath = [p.split('?')[0].rstrip('/') for p in df.pagePath]After this step, duplicate data will be removed. Also, rows that are deemed unnecessary can be deleted or merged into a single row with sums, means, etc. Or, data below a certain value (for example, median or mean) can be excluded from the analysis process.

If we are going to do mathematical calculations, let's make numeric values easy to calculate.

#df.bounceRate = [round(p) for p in df.bounceRate]

#df.avgTimeOnPage = [round(p) for p in df.avgTimeOnPage]

#df.avgSessionDuration = [round(p) for p in df.avgSessionDuration]

#df.avgPageLoadTime = [round(p) for p in df.avgPageLoadTime]

df.bounceRate = df.bounceRate.map(round) # percentage

df.avgTimeOnPage = df.avgTimeOnPage.map(round) # seconds

df.avgSessionDuration = df.avgSessionDuration.map(round) # seconds

df.avgPageLoadTime = df.avgPageLoadTime.map(round) # secondsAfter this step, it can be considered optional.

If we only work on Google Analytics data, we can easily filter the titles and page paths. If we need the Seach Console page data gathered from the site map, we should consider adding a new step to combine them with the previous data set. In the process, the page title and filtering are done after the tables are merged to avoid unnecessary repetitions.

Google Analytics will pass path and title value even if a page has 404 status code. If there is a 404 page, the header value generated by this page is sent. In this case, we can filter the titles of deleted and/or moved pages by values such as Page Not Found and remove these rows from the data frame using index values.

df.drop(df.index[df.pageTitle.str.contains('found|error|not set', case=False, regex=True)], axis=0, inplace=True)Of course, we can also include post, form, landing, product, and listing pages in this list. Again, let's take a look at the possible change in values.

df.describe()Visualizing The Data

There must have been quite a reduction in the number of observations in the data frame. We can now focus on specific situations. First, let's examine the distributions.

df.hist(figsize=(20, 10), grid = True, bins=25, color='#007bff', zorder=2, rwidth=0.9);

Next, let's create a boxplot plot to see the spread of the data around the median.

color = {

"boxes": "DarkGreen",

"whiskers": "DarkOrange",

"medians": "DarkBlue",

"caps": "Gray",

}

df.plot.box(vert=False, figsize=(20, 10), showmeans=True, color=color);

As you can see, there are outliers in almost all fields. Of course, we can detail the graph for each, look at the data closely and get clearer ideas.

df.avgPageLoadTime.plot.box(vert=False, figsize=(20,10), showmeans=True, color=color);The important thing is to make the pages that have received impressions but have a high load time and high bounce rate as close to the average as possible. For this, the first goal is to include outliers at least in the 1.5*(Q3-Q1) range.

df[

(df.bounceRate <= df.bounceRate.median()) &

(df.avgTimeOnPage <= df.avgTimeOnPage.median()) &

(df.avgSessionDuration <= df.avgSessionDuration.median()) &

(df.avgPageLoadTime <= df.avgPageLoadTime.median())

]| pagePath | pageTitle | bounceRate | pageViews | avgTimeOnPage | avgSessionDuration | avgPageLoadTime | |

|---|---|---|---|---|---|---|---|

| 925 | /tr/grav-markdown-icerik-yazimi | Grav Makdown İçerik Yazımı | 50 | 66 | 109 | 26 | 0 |

| 1260 | /tr/html5-api-kullanimlari-1 | HTML5 ve API Kullanımları | 48 | 35 | 130 | 17 | 0 |

| 1263 | /tr/mermaid-js | Mermaid JS Nedir? | 33 | 35 | 109 | 19 | 0 |

| 1392 | /tr/parse-urls-url-yapisi-cozumlemesi | URL Yapısı Çözümlemesi | 31 | 29 | 0 | 20 | 0 |

| 1401 | /tr/wodpress-grav-cms-gecisi | WordPress - GRAV CMS Geçişi | 0 | 29 | 37 | 0 | 0 |

| ... | ... | ... | ... | ... | ... | ... | ... |

| 5879 | /tr/wordpress-posts-markdown | WordPress İçeriklerin Markdown Olarak Dönüştür... | 0 | 1 | 3 | 0 | 0 |

| 5886 | /tr/wp-ads-conversion-tracking | WordPress Eklentisi: 'WP-Ads Conversion Tracki... | 0 | 1 | 51 | 0 | 1 |

| 5888 | /tr/wp-cli-ile-post-regex-img-search | WP-Cli İle Post İçeriklerinde Arama İşlemi | 0 | 1 | 11 | 0 | 0 |

| 5891 | /tr/wp-cli-post-meta-thumbnail | WP-CLI Post ve Meta İle Tüm Postlara Aynı Görs... | 0 | 1 | 1 | 0 | 0 |

| 5894 | /tr/wp-cron-nedir-nasil-kullanilir | WP-Cron Nedir? Nasıl Kullanılır? | 0 | 1 | 6 | 0 | 0 |

| 345 rows × 7 columns |

We have a list of contents that fall under median in all cases. Regarding these contents, we can ask the following questions;

- How often is this content updated?

- Is the content accessible to search engines?

- Is there a technical problem that affects the visit?

- Isn't the content interesting? What is the relevant search volume for the content?

- Is the content not descriptive enough?

- What is the sorting range of the content? Is it far behind the competition?

Looking at some of the content, the search volumes are quite low, and the content is weak. So our starting point is now clear. Our priority is to enrich this content and enrich the topic in focus to match possible closely related queries. Only these contents can be copied as a separated data frame and continuously optimized depends on the change positivity.

While partitioning the operations related to the pages, we can also proceed through quantile slices. Ultimately, our goal is to share more interesting content and remove content that has low impressions, high bounce rates, and that visitors don't want to waste time on for some reason.

df.loc[df.avgPageLoadTime > df.avgPageLoadTime.quantile(.75)].sort_values(by=['avgPageLoadTime', 'bounceRate'], ascending=[False, False], axis=0)Yes, in the next step, what can be done depending on the technical performance of the pages, such as average page loading time, etc.

If necessary, after technical improvements are completed, we can track the performance changes for a short time to be sure all things ok. Probably some of these steps will continue iteratively, and some of them will become redundant over time.

You can also create a word cloud to evaluate word repetitions in the context of a general or just a specific metric (e.g., pageView). Of course, in such cases, if a suffix and prefix are used on the website, these must be cleaned before the beginning.

from wordcloud import WordCloud, STOPWORDS, ImageColorGenerator

text = ' '.join(k for k in list(df.pageTitle))

stopwords = set(STOPWORDS)

stopwords.update(['ve','ile','user','behavior'])

wordcloud = WordCloud(max_font_size=50, max_words=100, background_color="white").generate(text)

plt.figure(figsize=(20,14), dpi=200)

plt.imshow(wordcloud, interpolation='bilinear')

plt.axis("off")

plt.tight_layout(pad=0)

plt.savefig('myfigure_200.png')

plt.show()Yes, that's all. We should not forget to choose a primary metric to test our hypotheses. Thus, we can be more sure of cause-effect relationships and get more testing ideas.